Introduction to Computers, the Internet and Java

Rafael García Cabrera [IES Virgen del Carmen]

September 2018

1 Reference

1.1 Book

1.2 Sample Chapter download

Chapter 1: Introduction to Computers, the Internet and Java

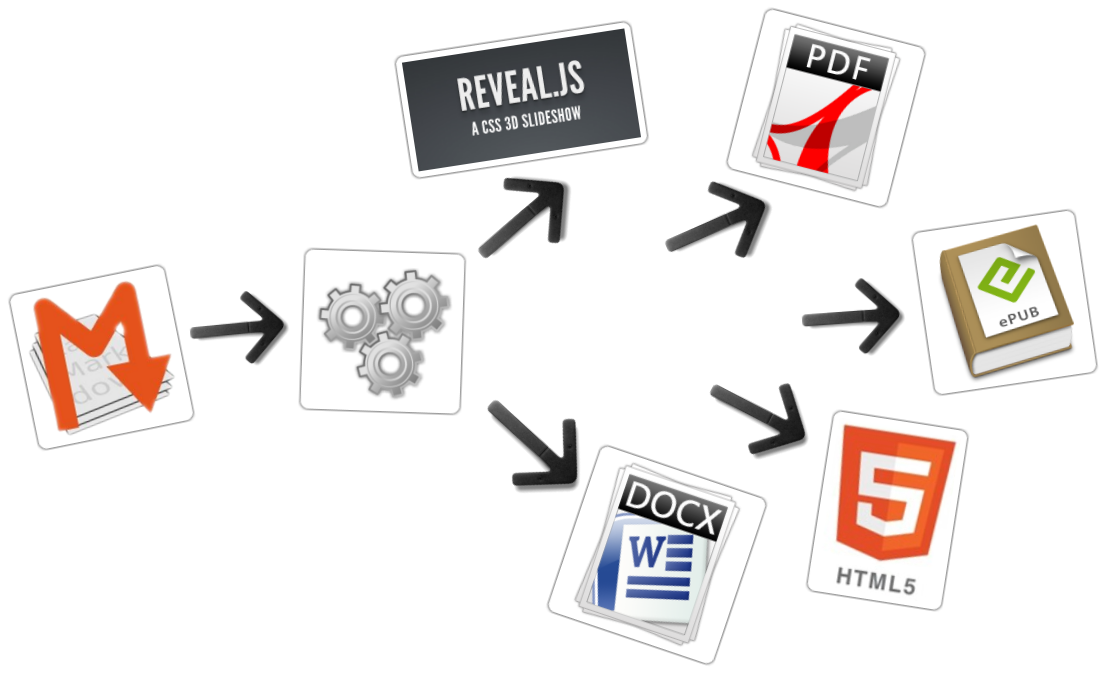

1.3 Slide Presentation

Made with MarkdownSlides (https://github.com/asanzdiego/markdownslides), a script to create slides from MD files.

The program source code is licensed under GPL 3.0.

1.4 MarkdownSlides

2 Introduction

2.1 Java (I)

- Java is one of the world’s most widely used computer programming languages (see the TIOBE Index)

- You’ll write instructions commanding computers to perform tasks

- Software (the instructions you write) controls hardware (computers)

- OOP, Object-oriented programming, today’s key programming methodology

2.2 Java (II)

Java supports these programming paradigms:

- procedural programming

- object-oriented programming

- generic programming

- functional programming with lambdas and streams
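As a minimal sketch of how these styles can coexist, the hypothetical class below computes the same result twice: once with a procedural loop and once with a lambda-based stream pipeline. The class and method names are illustrative assumptions, not taken from the book; the `List<Integer>` parameter shows generic programming in passing.

```java
import java.util.Arrays;
import java.util.List;

class ParadigmsSketch {

    // Procedural style: an explicit loop and a mutable accumulator.
    static int sumOfEvenSquaresLoop(List<Integer> numbers) {
        int total = 0;
        for (int n : numbers) {
            if (n % 2 == 0) {
                total += n * n;
            }
        }
        return total;
    }

    // Functional style: a stream pipeline built from lambdas.
    static int sumOfEvenSquaresStream(List<Integer> numbers) {
        return numbers.stream()
                .filter(n -> n % 2 == 0)
                .mapToInt(n -> n * n)
                .sum();
    }

    public static void main(String[] args) {
        List<Integer> numbers = Arrays.asList(1, 2, 3, 4);
        System.out.println(sumOfEvenSquaresLoop(numbers));   // 4 + 16 = 20
        System.out.println(sumOfEvenSquaresStream(numbers)); // 20
    }
}
```

Both methods return the same value; the stream version states what to compute, while the loop spells out how.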

2.3 WORA

Write once, run anywhere

2.4 Java Editions

Java is for developing cross-platform, general-purpose applications.

- JSE (Java Standard Edition): contains the capabilities needed to develop desktop and server applications.

- JEE (Java Enterprise Edition): is geared toward developing large-scale, distributed networking applications and web-based applications.

- JME (Java Micro Edition): a subset of Java SE geared toward developing applications for resource-constrained embedded devices, such as smartwatches, television set-top boxes…

2.5 Hello World

public class HelloWorld {
    public static void main(String[] args) {
        System.out.println("Hello World");
    }
}

3 Hardware and Software

3.1 Moore’s Law

- For many decades, hardware costs have fallen rapidly.

- Every year or two, the capacities of computers have approximately doubled inexpensively.

- This applies to the amount of memory and secondary storage, processor speeds and communications bandwidth.

Gordon Moore, co-founder of Intel
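The doubling described above can be written as capacity = initial × 2^(years / doubling period). The sketch below is only an illustration of that arithmetic; the class name, method name and the two-year doubling period are assumptions chosen for the example, not measured data.

```java
class MooresLawSketch {

    // Capacity after `years`, assuming it doubles once every `doublingPeriodYears`.
    static double projectedCapacity(double initial, double years, double doublingPeriodYears) {
        return initial * Math.pow(2.0, years / doublingPeriodYears);
    }

    public static void main(String[] args) {
        // Doubling every 2 years for 10 years gives 5 doublings: 2^5 = 32 times the capacity.
        System.out.println(projectedCapacity(1.0, 10.0, 2.0)); // 32.0
    }
}
```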

3.2 Video: 50 Years of Moore’s Law

4 Computer Organization

4.1 Logical Units

- Input unit

- Output unit

- Memory unit

- Arithmetic and logic unit (ALU)

- Central processing unit (CPU)

- Secondary storage unit

4.2 Von Neumann architecture

4.3 Input Unit

This “receiving” section obtains information (data and computer programs) from input devices and places it at the disposal of the other units for processing.

- keyboard

- touch screen

- mouse

- game controllers

- voice commands

- scanning images and bar codes, …

4.4 Output Unit

This “shipping” section takes information the computer has processed and places it on various output devices to make it available for use outside the computer.

- screen

- printer

- secondary storage devices:

  - solid-state drives (SSDs)

  - hard drives (HD)

  - DVD drives and USB flash drives

- virtual reality devices, …

4.5 Memory unit

The memory unit is also called memory, primary memory or RAM (Random Access Memory).

This rapid-access, relatively low-capacity “warehouse” section retains information that has been entered through the input unit, making it immediately available for processing when needed. The memory unit also retains processed information until it can be placed on output devices by the output unit. Information in the memory unit is volatile (lost when the computer’s power is turned off).

4.6 ALU

The Arithmetic and Logic Unit performs calculations, such as addition, subtraction, multiplication and division. It also contains the decision mechanisms that allow the computer, for example, to compare two items from the memory unit to determine whether they’re equal.

In today’s systems, the ALU is implemented as part of the next logical unit, the CPU.

4.7 CPU

The Central Processing Unit coordinates and supervises the operation of the other sections. The CPU tells the input unit when information should be read into the memory unit, tells the ALU when information from the memory unit should be used in calculations and tells the output unit when to send information from the memory unit to certain output devices.

A multicore processor implements multiple processors on a single integrated-circuit chip and, hence, can perform many operations simultaneously.

4.8 Secondary storage unit (I)

This is the long-term, high-capacity “warehousing” section.

Programs or data not actively being used by the other units normally are placed on secondary storage devices (e.g., your hard drive) until they’re again needed.

Information on secondary storage devices is persistent (it’s preserved even when the computer’s power is turned off).

4.9 Secondary storage unit (II)

Secondary storage information takes much longer to access than information in primary memory, but its cost per unit is much less.

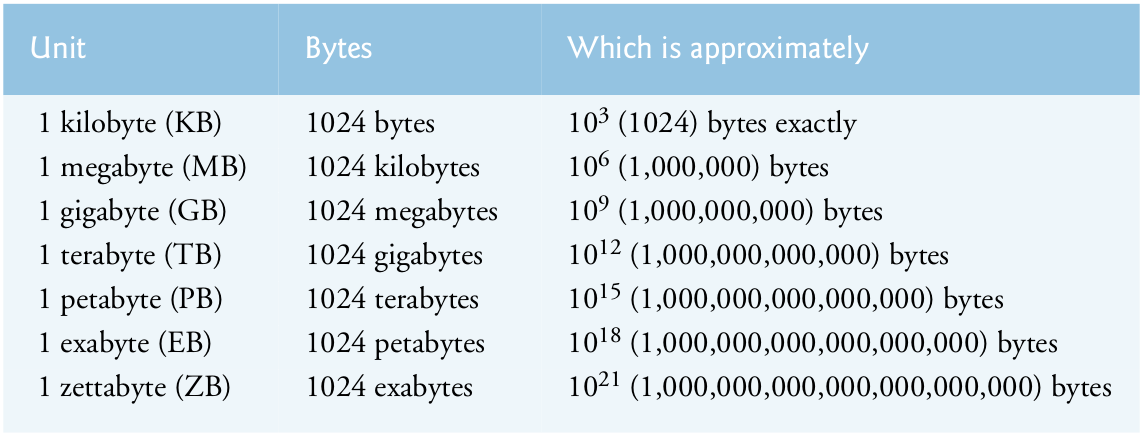

Examples of secondary storage devices include solid-state drives (SSDs), hard drives, DVD drives and USB flash drives, some of which can hold over 2 TB (TB stands for terabytes).

4.10 Byte measurements

5 Data Hierarchy

5.1 Bits

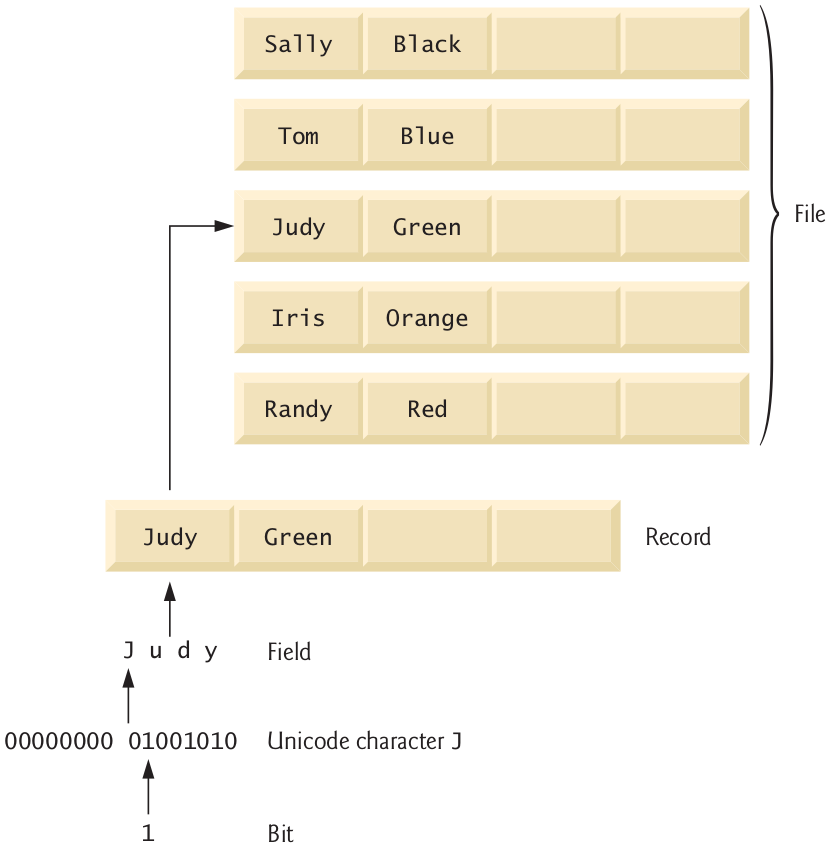

A bit (short for “binary digit”) is the smallest data item in a computer; it can take the value 0 or 1.

Data items processed by computers form a data hierarchy that becomes larger and more complex in structure as we progress from the simplest data items (bits) to richer ones, such as characters and fields.

It’s tedious for people to work with data in the low-level form of bits. Instead, they prefer to work with decimal digits (0-9), letters (A-Z and a-z), and special symbols (e.g., $, @, %, &, *, (, ), –, +, ", :, ? and /).

5.2 Characters (I)

Digits, letters and special symbols are known as characters.

Computer’s character set: all the characters used to write programs and represent data items.

Computers process only 1s and 0s, so a computer’s character set represents every character as a pattern of 1s and 0s.

5.3 Characters (II)

Java uses Unicode characters that are composed of one, two or four bytes (8, 16 or 32 bits).

Unicode contains characters for many of the world’s languages.

The ASCII (American Standard Code for Information Interchange) character set is a popular subset of Unicode that represents uppercase and lowercase letters, digits and some common special characters.
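To make the idea of characters as bit patterns concrete, here is a small sketch. The class and the helper `asciiBits` are hypothetical names introduced for this example; the encodings shown (UTF-8 and UTF-16) are just two of the ways Unicode characters can be stored as bytes.

```java
import java.nio.charset.StandardCharsets;

class CharacterBitsSketch {

    // Returns the 8-bit pattern of an ASCII character, left-padded with zeros.
    static String asciiBits(char c) {
        String bits = Integer.toBinaryString(c);
        while (bits.length() < 8) {
            bits = "0" + bits;
        }
        return bits;
    }

    public static void main(String[] args) {
        System.out.println((int) 'A');      // 65, the same code in ASCII and in Unicode
        System.out.println(asciiBits('A')); // 01000001
        // The same character can occupy a different number of bytes per encoding.
        System.out.println("A".getBytes(StandardCharsets.UTF_8).length);    // 1
        System.out.println("A".getBytes(StandardCharsets.UTF_16BE).length); // 2
    }
}
```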

5.4 Fields

Just as characters are composed of bits, fields are composed of characters or bytes.

A field is a group of characters or bytes that conveys meaning. For example, a field consisting of uppercase and lowercase letters can be used to represent a person’s name, and a field consisting of decimal digits could represent a person’s age.

5.5 Records (I)

A record is a group of related fields (implemented as a class in Java).

Example: payroll system. The record for an employee might consist of the following fields (possible types for these fields are shown in parentheses):

- Employee identification number (a whole number)

- Name (a string of characters)

- Address (a string of characters)

- Hourly pay rate (a number with a decimal point)

5.6 Records (II)

In the preceding example, all the fields belong to the same employee. A company might have many employees and a payroll record for each.

public class Employee {
    private int idEmployee;
    private String name;
    private String address;
    private double hourlyPayRate;
    . . .
}

5.7 Files

A file is a group of related records.

More generally, a file contains arbitrary data in arbitrary formats. In some operating systems, a file is viewed simply as a sequence of bytes. Any organization of the bytes in a file, such as organizing the data into records, is a view created by the application programmer.
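The sketch below illustrates the two views of a file: the operating system sees bytes, while the application chooses to read them as records. It is an assumption-laden example: the class name, the temporary file and the comma-separated record layout are invented here for illustration.

```java
import java.io.IOException;
import java.io.UncheckedIOException;
import java.nio.charset.StandardCharsets;
import java.nio.file.Files;
import java.nio.file.Path;
import java.util.Arrays;
import java.util.List;

class FileRecordsSketch {

    // Writes one record per line, then reads the same file back as bytes and as lines.
    static List<String> roundTrip(List<String> records) {
        try {
            Path file = Files.createTempFile("employees", ".txt");
            try {
                Files.write(file, records, StandardCharsets.UTF_8);
                // Low-level view: the file is just a sequence of bytes.
                byte[] raw = Files.readAllBytes(file);
                System.out.println(raw.length + " bytes on disk");
                // Application view: the same bytes interpreted as records.
                return Files.readAllLines(file, StandardCharsets.UTF_8);
            } finally {
                Files.deleteIfExists(file);
            }
        } catch (IOException e) {
            throw new UncheckedIOException(e);
        }
    }

    public static void main(String[] args) {
        // Each line is a record; the comma-separated values are its fields.
        List<String> records = Arrays.asList("1,Ana,Main St 1,12.5", "2,Luis,Oak Ave 7,14.0");
        System.out.println(roundTrip(records));
    }
}
```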

It’s not unusual for an organization to have many files, some containing billions, or even trillions, of characters of information.

5.8 Database

A database is a collection of data organized for easy access and manipulation.

The most popular model is the relational database, in which data is stored in simple tables. A table includes records and fields.

5.9 Big Data

The amount of data being produced worldwide is enormous and growing quickly.

Big data applications deal with massive amounts of data and this field is growing quickly, creating lots of opportunity for software developers.

6 Machine Languages, Assembly Languages and High-Level Languages

6.1 Types of programming languages

Programmers write instructions in various programming languages, some directly understandable by computers and others requiring intermediate translation steps. Hundreds of such languages are in use today. These may be divided into three general types:

- Machine languages

- Assembly languages

- High-level languages

6.2 Machine Languages

Any computer can directly understand only its own machine language, defined by its hardware design.

Machine languages consist of strings of numbers (ultimately reduced to 1s and 0s) that instruct computers to perform their most elementary operations one at a time.

They are machine dependent (a particular machine language can be used on only one type of computer).

6.3 Assembly Languages and Assemblers

Programming in machine language was simply too slow and tedious for most programmers.

Programmers began using English-like abbreviations to represent elementary operations. These abbreviations formed the basis of assembly languages.

Assemblers (translator programs) were developed to convert early assembly-language programs to machine language at computer speeds.

6.4 High-Level Languages and Compilers

To speed the programming process, high-level languages were developed in which single statements could be written to accomplish substantial tasks.

High-level languages allow you to write instructions that look almost like everyday English and contain commonly used mathematical notations.

Compilers convert high-level language programs into machine language. Grace Hopper invented the first compiler in the early 1950s.

6.5 High-Level Languages and Interpreters

Compiling a large high-level language program into machine language can take considerable computer time.

Interpreter programs, developed to execute high-level language programs directly, avoid the delay of compilation, although they run slower than compiled programs.

Java uses a clever performance-tuned mixture of compilation and interpretation to run programs.

Java is one of the world’s most widely used high-level programming languages.

7 Introduction to Object Technology

7.1 Objects (I)

Objects, or more precisely, the classes objects come from, are essentially reusable software components.

There are date objects, time objects, audio objects, video objects, automobile objects, people objects, etc.

Almost any noun can be reasonably represented as a software object in terms of attributes (e.g., name, color and size) and behaviors (e.g., calculating, moving and communicating).

7.2 Objects (II)

Using a modular, object-oriented design and implementation approach can make software-development groups much more productive than was possible with earlier popular techniques like “structured programming”.

Object-oriented programs are often easier to understand, correct and modify.

7.3 Automobile as an Object (I)

A simple analogy: Suppose you want to drive a car and make it go faster by pressing its accelerator pedal.

Before you can drive a car, someone has to design it.

A car typically begins as engineering drawings, similar to the blueprints that describe the design of a house.

These drawings include the design for an accelerator pedal.

7.4 Automobile as an Object (II)

The pedal hides from the driver the complex mechanisms that actually make the car go faster, just as the brake pedal “hides” the mechanisms that slow the car, and the steering wheel “hides” the mechanisms that turn the car.

This enables people with little or no knowledge of how engines, braking and steering mechanisms work to drive a car easily.

7.5 Automobile as an Object (III)

Just as you cannot cook meals in the kitchen of a blueprint, you cannot drive a car’s engineering drawings.

Before you can drive a car, it must be built from the engineering drawings that describe it.

A completed car has an actual accelerator pedal to make it go faster, but even that’s not enough—the car won’t accelerate on its own (hopefully!), so the driver must press the pedal to accelerate the car.

7.6 Methods and Classes (I)

Let’s use the car example to introduce some key object-oriented programming concepts.

- Performing a task in a program requires a method.

- The method houses the program statements that actually perform its tasks.

- The method hides these statements from its user, just as the accelerator pedal of a car hides from the driver the mechanisms of making the car go faster.

7.7 Methods and Classes (II)

- In Java, we create a program unit called a class to house the set of methods that perform the class’s tasks.

- A class is similar in concept to a car’s engineering drawings, which house the design of an accelerator pedal, steering wheel, and so on.

7.8 Instantiation

Just as someone has to build a car from its engineering drawings before you can actually drive a car, you must build an object of a class before a program can perform the tasks that the class’s methods define.

The process of doing this is called instantiation.

An object is then referred to as an instance of its class.
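A minimal sketch of the car analogy in Java follows. The `Car` class, its method names and the 10 km/h step are hypothetical choices made for this illustration.

```java
class InstantiationSketch {
    public static void main(String[] args) {
        Car myCar = new Car();    // instantiation: build an object from the class
        myCar.pressAccelerator(); // ask the object to perform a task
        System.out.println(myCar.getSpeedKmh()); // 10
    }
}

// The class plays the role of the engineering drawings.
class Car {
    private int speedKmh = 0;

    // The method hides how acceleration works, like the accelerator pedal.
    void pressAccelerator() {
        speedKmh += 10;
    }

    int getSpeedKmh() {
        return speedKmh;
    }
}
```

Here `myCar` is an instance of `Car`; the class by itself does nothing until an object is built from it.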

7.9 Reuse (I)

- Just as a car’s engineering drawings can be reused many times to build many cars, you can reuse a class many times to build many objects.

- Reuse of existing classes when building new classes and programs saves time and effort.

- Reuse also helps you build more reliable and effective systems, because existing classes and components often have undergone extensive testing, debugging and performance tuning.

7.10 Reuse (II)

- Just as the notion of interchangeable parts was crucial to the Industrial Revolution, reusable classes are crucial to the software revolution that has been spurred by object technology.

Use a building-block approach to creating your programs. Avoid reinventing the wheel: use existing high-quality pieces wherever possible. This software reuse is a key benefit of object-oriented programming.

7.11 Messages and Method Calls

- When you drive a car, pressing its gas pedal sends a message to the car to perform a task, that is, to go faster.

- Similarly, you send messages to an object.

- Each message is implemented as a method call that tells a method of the object to perform its task.

7.12 Attributes and Instance Variables (I)

- A car has attributes (color, number of doors, amount of gas in its tank, its current speed, …)

- The car’s attributes are represented as part of its design in its engineering diagrams.

- Every car maintains its own attributes.

- For example, each car knows how much gas is in its own gas tank, but not how much is in the tanks of other cars.

7.13 Attributes and Instance Variables (II)

- An object has attributes that it carries along as it’s used in a program.

- These attributes are specified as part of the object’s class.

- For example, a bank-account object has a balance attribute that represents the amount of money in the account. Each bank-account object knows the balance in the account it represents, but not the balances of the other accounts in the bank.

- Attributes are specified by the class’s instance variables.
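The bank-account example can be sketched as follows. The class layout and method names are assumptions made for illustration; the point is only that each object keeps its own copy of the instance variable.

```java
class BankAccountSketch {
    public static void main(String[] args) {
        BankAccount first = new BankAccount(100.0);
        BankAccount second = new BankAccount(50.0);
        first.deposit(25.0); // changes only the first account's balance
        System.out.println(first.getBalance());  // 125.0
        System.out.println(second.getBalance()); // 50.0
    }
}

class BankAccount {
    private double balance; // instance variable: one copy per object

    BankAccount(double initialBalance) {
        balance = initialBalance;
    }

    void deposit(double amount) {
        balance += amount;
    }

    double getBalance() {
        return balance;
    }
}
```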

7.14 Encapsulation and Information Hiding

- Classes (and their objects) encapsulate, i.e., encase, their attributes and methods. A class’s (and its object’s) attributes and methods are intimately related.

- Objects may communicate with one another, but they’re normally not allowed to know how other objects are implemented; implementation details can be hidden within the objects themselves.

- This information hiding, as we’ll see, is crucial to good software engineering.
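As a hedged illustration of information hiding, the hypothetical `TimeOfDay` class below stores its value as minutes since midnight, but callers only ever see hours and minutes. The internal representation could change without affecting code that uses the class.

```java
class EncapsulationSketch {
    public static void main(String[] args) {
        TimeOfDay t = new TimeOfDay();
        t.setTime(13, 45);
        System.out.println(t.getHour());   // 13
        System.out.println(t.getMinute()); // 45
    }
}

class TimeOfDay {
    // Hidden implementation detail: callers cannot read or modify this directly.
    private int minutesSinceMidnight = 0;

    // The method validates input, so the object can never hold an invalid time.
    void setTime(int hour, int minute) {
        if (hour < 0 || hour > 23 || minute < 0 || minute > 59) {
            throw new IllegalArgumentException("invalid time: " + hour + ":" + minute);
        }
        minutesSinceMidnight = hour * 60 + minute;
    }

    int getHour() {
        return minutesSinceMidnight / 60;
    }

    int getMinute() {
        return minutesSinceMidnight % 60;
    }
}
```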

7.15 Inheritance

A new class of objects can be created conveniently by inheritance: the new class (called the subclass) starts with the characteristics of an existing class (called the superclass), possibly customizing them and adding unique characteristics of its own.

In our car analogy, an object of class “convertible” certainly is an object of the more general class “automobile”, but more specifically, the roof can be raised or lowered.
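The convertible analogy might look like this in Java. The class and method names are illustrative assumptions; `extends` is the Java keyword that expresses the subclass relationship.

```java
class InheritanceSketch {
    public static void main(String[] args) {
        Convertible convertible = new Convertible();
        convertible.accelerate(); // inherited from Automobile
        convertible.lowerRoof();  // added by Convertible
        Automobile asAutomobile = convertible; // a Convertible is an Automobile
        System.out.println(asAutomobile.getSpeedKmh()); // 10
        System.out.println(convertible.isRoofDown());   // true
    }
}

// Superclass: the more general kind of object.
class Automobile {
    private int speedKmh = 0;

    void accelerate() {
        speedKmh += 10;
    }

    int getSpeedKmh() {
        return speedKmh;
    }
}

// Subclass: starts with everything Automobile has and adds a roof.
class Convertible extends Automobile {
    private boolean roofDown = false;

    void lowerRoof() {
        roofDown = true;
    }

    void raiseRoof() {
        roofDown = false;
    }

    boolean isRoofDown() {
        return roofDown;
    }
}
```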

7.16 Interfaces (I)

Java also supports interfaces, collections of related methods that typically enable you to tell objects what to do, but not how to do it.

A “basic-driving capabilities” interface consisting of a steering wheel, an accelerator pedal and a brake pedal would enable a driver to tell the car what to do.

Once you know how to use this interface for turning, accelerating and braking, you can drive many types of cars, even though manufacturers may implement these systems differently.

7.17 Interfaces (II)

A class implements zero or more interfaces, each of which can have one or more methods, just as a car implements separate interfaces for basic driving functions, controlling the radio, controlling the heating and air conditioning systems.

Just as car manufacturers implement capabilities differently, classes may implement an interface’s methods differently.
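A sketch of the “basic-driving capabilities” idea follows. The `Drivable` interface and the two car classes are hypothetical names for this example; the returned strings merely stand in for real behavior.

```java
class InterfaceSketch {

    // Works with any Drivable, without knowing how it is implemented.
    static String drive(Drivable vehicle) {
        return vehicle.accelerate() + ", then " + vehicle.brake();
    }

    public static void main(String[] args) {
        System.out.println(drive(new GasCar()));
        System.out.println(drive(new ElectricCar()));
    }
}

// The interface says what can be done, not how.
interface Drivable {
    String accelerate();
    String brake();
}

class GasCar implements Drivable {
    @Override
    public String accelerate() {
        return "more fuel to the engine";
    }

    @Override
    public String brake() {
        return "brake pads grip the discs";
    }
}

class ElectricCar implements Drivable {
    @Override
    public String accelerate() {
        return "more current to the motor";
    }

    @Override
    public String brake() {
        return "motor recovers energy";
    }
}
```

The `drive` method is written once against the interface and works unchanged with both implementations.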

7.18 Object-Oriented Analysis and Design (I)

For large and complex projects, you should not simply sit down and start writing programs.

- To create the best solutions, you should follow a detailed analysis process:

  - for determining your project’s requirements (defining what the system is supposed to do) and

  - developing a design that satisfies them (specifying how the system should do it).

7.19 Object-Oriented Analysis and Design (II)

- Carefully review the design (and have your design reviewed by other software professionals) before writing any code.

Analyzing and designing your system from an object-oriented point of view is called an object-oriented analysis-and-design (OOAD) process.

- Object-oriented programming (OOP) in a language like Java allows you to implement an object-oriented design as a working system.

7.20 UML (Unified Modeling Language)

The Unified Modeling Language (UML) is now the most widely used graphical scheme for modeling object-oriented systems.

8 Operating Systems

8.1 What is an Operating System?

Operating systems are software systems that make using computers more convenient for users, application developers and system administrators.

- Provide services that allow each application to execute safely, efficiently and concurrently (i.e., in parallel) with other applications.

- The software that contains the core components of the operating system is called the kernel.

8.2 Popular Operating Systems

Popular desktop operating systems include Linux, Windows and macOS (formerly called OS X).

The most popular mobile operating systems used in smartphones and tablets are Google’s Android and Apple’s iOS (for iPhone, iPad and iPod Touch devices).

8.3 Windows (I)

Microsoft developed (mid-1980s) the Windows operating system, consisting of a graphical user interface (GUI) built on top of DOS (Disk Operating System)—an enormously popular personal-computer operating system that users interacted with by typing commands.

Windows borrowed many concepts (such as icons, menus and windows) developed by Xerox PARC and popularized by early Apple Macintosh operating systems.

8.4 Windows (II)

Windows 10 is Microsoft’s latest operating system—its features include enhancements to the Start menu and user interface, Cortana personal assistant for voice interactions, Action Center for receiving notifications, Microsoft’s new Edge web browser, and more.

Windows is a proprietary operating system: it’s controlled by Microsoft exclusively.

It is by far the world’s most widely used desktop operating system.

8.5 Linux (I)

The Linux operating system is perhaps the greatest success of the open-source movement (see also the GNU/Linux naming controversy).

Open-source software departs from the proprietary software development style that dominated software’s early years.

With open-source development, individuals and companies contribute their efforts in developing, maintaining and evolving software in exchange for the right to use that software for their own purposes, typically at no charge.

8.6 Linux (II)

Open-source code is often scrutinized by a much larger audience than proprietary software, so errors often get removed faster.

The Linux kernel is the core of the most popular open-source, freely distributed, full-featured operating system.

It’s developed by a loosely organized team of volunteers.

Linux has become extremely popular on servers and in embedded systems, such as Google’s Android-based smartphones.

8.7 Apple’s macOS and iOS

Apple, founded in 1976 by Steve Jobs and Steve Wozniak, quickly became a leader in personal computing.

Apple’s proprietary operating system, iOS, is derived from Apple’s macOS and is used in the iPhone, iPad, iPod Touch, Apple Watch and Apple TV devices.

In 2014, Apple introduced its new Swift programming language, which became open source in 2015. The iOS app-development community is shifting from Objective-C to Swift.

8.8 Google’s Android

Android is based on the Linux kernel and Java.

Android apps can also be developed in C++ and C.

One benefit of developing Android apps is the openness of the platform. The operating system is open source and free.

Android was developed by Android, Inc., which was acquired by Google in 2005.

9 Programming Languages

9.1 Some programming languages (I)

9.2 Some programming languages (II)

9.3 Java (I)

Microprocessors have had a profound impact in intelligent consumer-electronic devices, including the recent explosion in the “Internet of Things”.

In 1991, Sun Microsystems funded an internal corporate research project led by James Gosling, which resulted in a C++-based object-oriented programming language, initially named Oak, that Sun later called Java.

Using Java, you can write programs that will run on a great variety of computer systems and computer-controlled devices. This is sometimes called “write once, run anywhere”.

9.4 Java (II)

- Java drew the attention of the business community because of the phenomenal interest in the Internet. It’s now used:

  - to develop large-scale enterprise applications,

  - to enhance the functionality of web servers (computers that provide the content we see in web browsers),

  - to provide applications for consumer devices (cell phones, smartphones, television set-top boxes, …),

  - to develop robotics software and for many other purposes.

9.5 Java (III)

It’s also the key language for developing Android smartphone and tablet apps.

Sun Microsystems was acquired by Oracle in 2010.

Java has become the most widely used general-purpose programming language with more than 10 million developers.

9.6 Java Class Libraries

You can create each class and method you need to form your programs. However, most Java programmers take advantage of the rich collections of existing classes and methods in the Java class libraries, also known as the Java APIs (Application Programming Interfaces).
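As a small example of reusing the class libraries instead of writing everything yourself, the sketch below sorts a list with `java.util.Collections` rather than a hand-written sorting routine. The class and method names `LibrarySketch` and `sortedCopy` are assumptions for this illustration.

```java
import java.util.ArrayList;
import java.util.Arrays;
import java.util.Collections;
import java.util.List;

class LibrarySketch {

    // Returns a sorted copy, leaving the caller's list untouched.
    static List<String> sortedCopy(List<String> names) {
        List<String> copy = new ArrayList<>(names); // ArrayList comes from the Java API
        Collections.sort(copy);                     // so does the sorting algorithm
        return copy;
    }

    public static void main(String[] args) {
        List<String> systems = Arrays.asList("Linux", "Android", "macOS");
        System.out.println(sortedCopy(systems)); // [Android, Linux, macOS]
    }
}
```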

10 A Typical Java Development Environment

10.1 Five phases (I)

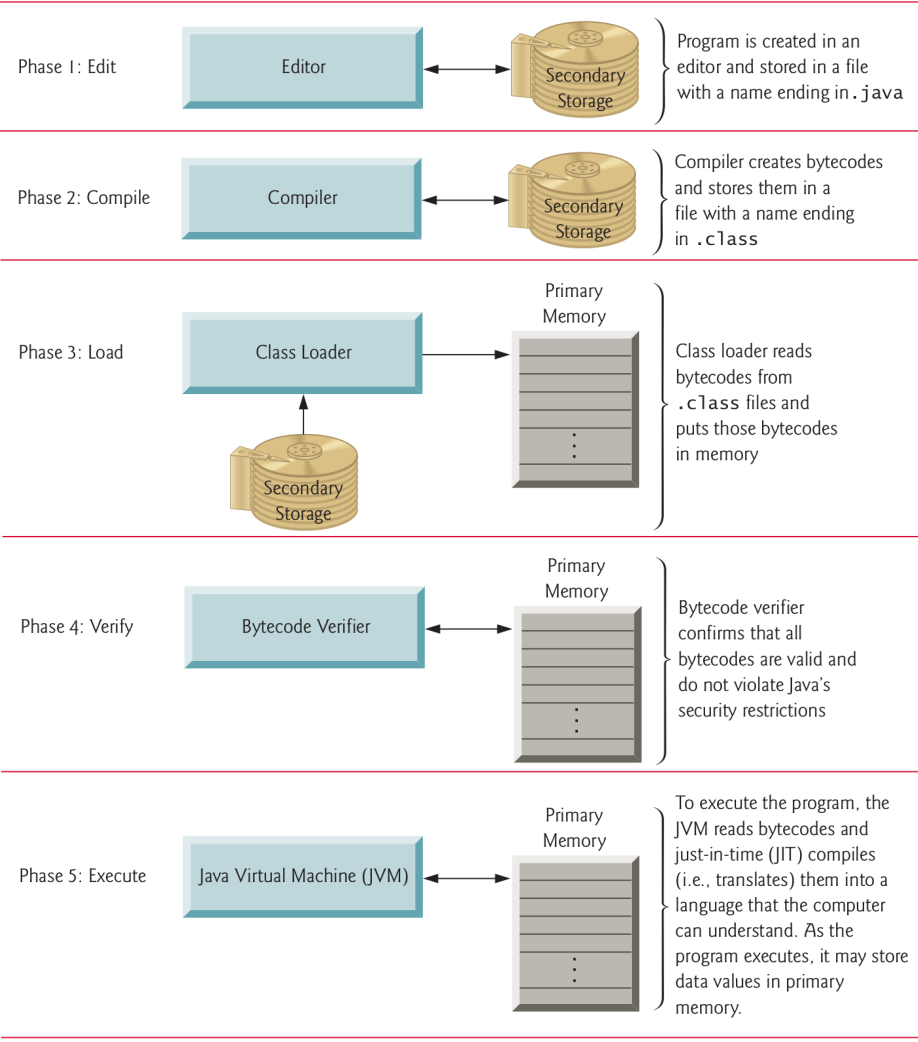

Normally there are five phases to create and execute a Java application:

- edit,

- compile,

- load,

- verify and

- execute

10.2 Five phases (II)

10.3 Phase 1: Creating a Program (I)

10.4 Phase 1: Creating a Program (II)

10.5 Phase 1: Creating a Program (III)

Integrated development environments (IDEs) provide tools that support the software development process, such as editors, debuggers for locating logic errors that cause programs to execute incorrectly and more.

The most popular Java IDEs are:

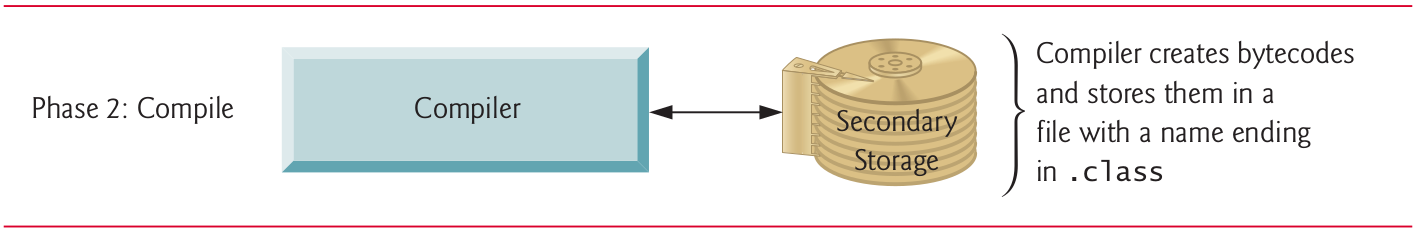

10.6 Phase 2: Compiling a Java Program into Bytecodes (I)

10.7 Phase 2: Compiling a Java Program into Bytecodes (II)

- Use the command javac (Java compiler) to compile a program.

- For example, to compile a program called “Welcome.java”, you’d type in your system’s command line:

javac Welcome.java

- If the program compiles, the compiler produces a .class file called “Welcome.class”

10.8 Phase 2: Compiling a Java Program into Bytecodes (III)

- IDEs typically provide a menu item, such as Build or Make, that invokes the javac command for you.

- If the compiler detects errors, you’ll need to go back to Phase 1 and correct them.

- The Java compiler translates Java source code into bytecodes that represent the tasks to execute during the execution phase (Phase 5).

- The Java Virtual Machine (JVM), a part of the JDK and the foundation of the Java platform, executes bytecodes.

10.9 Phase 2: Compiling a Java Program into Bytecodes (IV)

- A virtual machine (VM) is a software application that simulates a computer but hides the underlying operating system and hardware from the programs that interact with it.

- If the same VM is implemented on many computer platforms, applications written for that type of VM can be used on all those platforms.

- The JVM is one of the most widely used virtual machines. Microsoft’s .NET uses a similar virtual-machine architecture.

10.10 Phase 2: Compiling a Java Program into Bytecodes (V)

Machine-language instructions are platform dependent (dependent on specific computer hardware), whereas bytecode instructions are platform independent.

So Java’s bytecodes are portable: without recompiling the source code, the same bytecode instructions can execute on any platform containing a JVM that understands the version of Java in which the bytecodes were compiled.

10.11 Phase 2: Compiling a Java Program into Bytecodes (VI)

- The JVM is invoked by the java command. For example:

java Welcome

This begins Phase 3. IDEs typically provide a menu item, such as Run, that invokes the java command for you.

10.12 Phase 2: Compiling a Java Program into Bytecodes (VII)

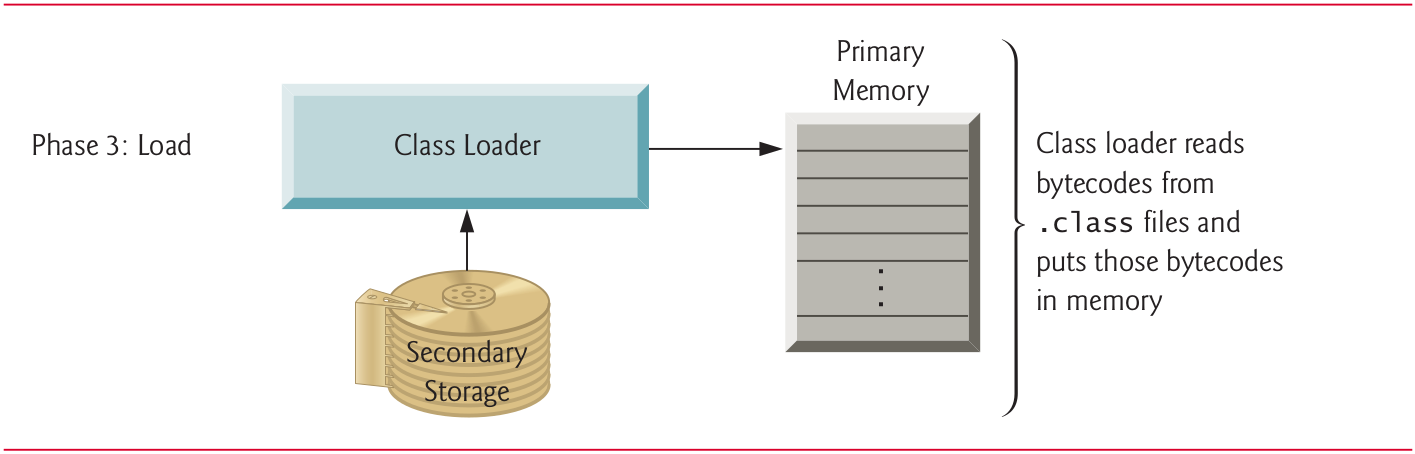

10.13 Phase 3: Loading a Program into Memory (I)

10.14 Phase 3: Loading a Program into Memory (II)

The JVM places the program in memory to execute it; this is known as loading.

The JVM’s class loader takes the “.class” files containing the program’s bytecodes and transfers them to primary memory.

It also loads any of the “.class” files provided by Java that your program uses.

The “.class” files can be loaded from a disk on your system or over a network.

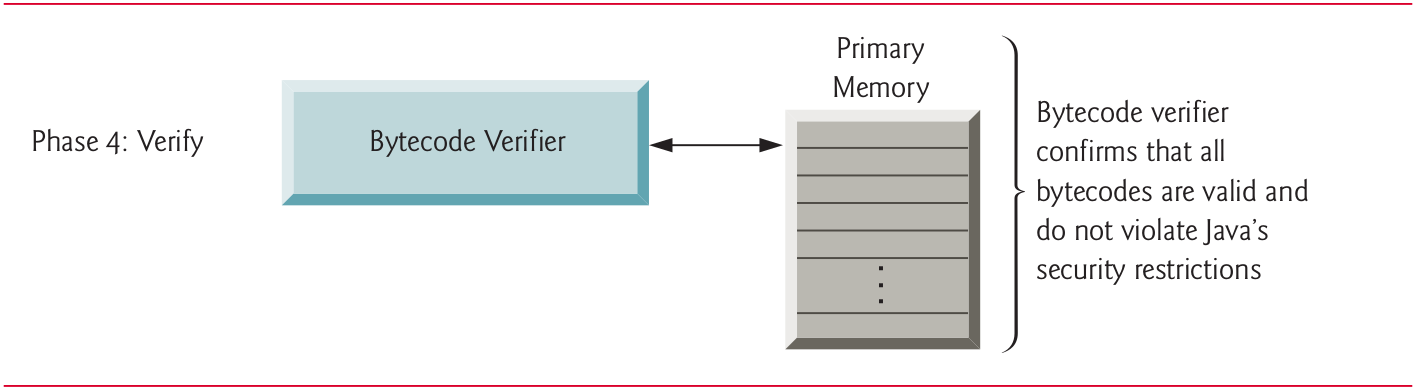

10.15 Phase 4: Bytecode Verification (I)

10.16 Phase 4: Bytecode Verification (II)

In Phase 4, as the classes are loaded, the bytecode verifier examines their bytecodes to ensure that they’re valid and do not violate Java’s security restrictions.

Java enforces strong security to make sure that Java programs arriving over the network do not damage your files or your system (as computer viruses and worms might).

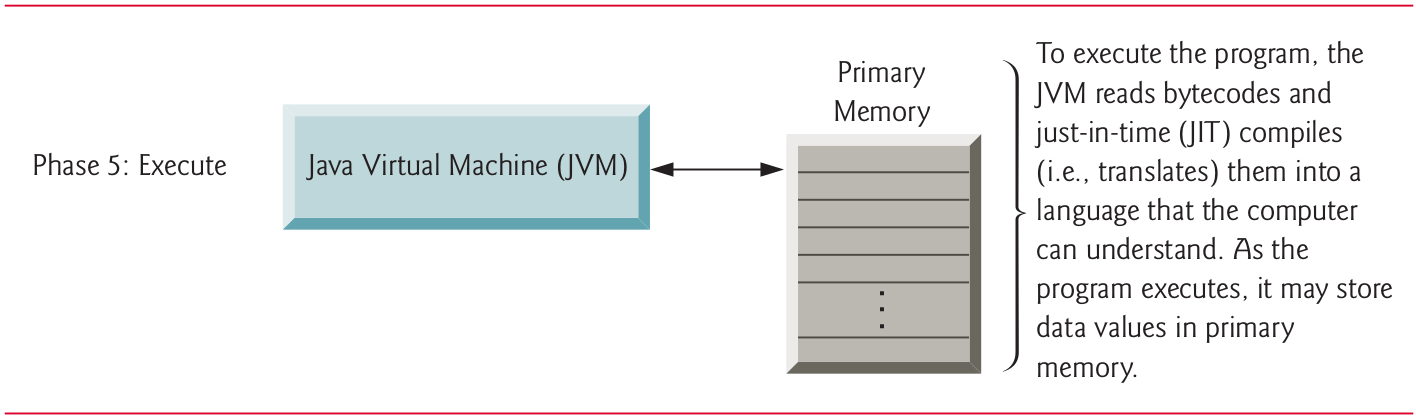

10.17 Phase 5: Execution (I)

10.18 Phase 5: Execution (II)

The JVM executes the bytecodes to perform the program’s specified actions.

In early Java versions, the JVM was simply a Java-bytecode interpreter. Most programs would execute slowly, because the JVM would interpret and execute one bytecode at a time. Some modern computer architectures can execute several instructions in parallel.

10.19 Phase 5: Execution (III)

Today’s JVMs typically execute bytecodes using a combination of interpretation and just-in-time (JIT) compilation.

In this process, the JVM analyzes the bytecodes as they’re interpreted, searching for hot spots (bytecodes that execute frequently).

For these parts, a just-in-time (JIT) compiler, such as Oracle’s Java HotSpot™ compiler, translates the bytecodes into the computer’s machine language.

10.20 Phase 5: Execution (IV)

- When the JVM encounters these compiled parts again, the faster machine-language code executes. Thus programs actually go through two compilation phases:

  - one in which Java code is translated into bytecodes (for portability across JVMs on different computer platforms)

  - and a second in which, during execution, the bytecodes are translated into machine language for the computer on which the program executes.

10.21 Problems That May Occur at Execution Time (I)

10.22 Problems That May Occur at Execution Time (II)

Each of the preceding phases can fail because of various errors.

This would cause the Java program to display an error message.

So, you’d return to the edit phase, make the necessary corrections and proceed through the remaining phases again to determine whether the corrections fixed the problem(s).

10.23 Problems That May Occur at Execution Time (III)

Errors such as division by zero occur as a program runs, so they’re called runtime errors or execution-time errors.

Fatal runtime errors cause programs to terminate immediately without having successfully performed their jobs.

Nonfatal runtime errors allow programs to run to completion, often producing incorrect results.
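The difference can be sketched in a few lines. In this hypothetical example, integer division by zero raises an `ArithmeticException` (fatal if nothing catches it), while an integer overflow lets the program keep running with a wrong result. The class and method names are assumptions for illustration.

```java
class RuntimeErrorSketch {

    // Integer division by zero throws ArithmeticException at execution time.
    // Without the catch block, the exception would terminate the program.
    static String divide(int a, int b) {
        try {
            return String.valueOf(a / b);
        } catch (ArithmeticException e) {
            return "error: " + e.getMessage();
        }
    }

    // Nonfatal error: a + b can silently overflow, so the program runs to
    // completion but the average is wrong for very large values.
    static int naiveAverage(int a, int b) {
        return (a + b) / 2;
    }

    public static void main(String[] args) {
        System.out.println(divide(10, 2)); // 5
        System.out.println(divide(10, 0)); // an "error: ..." message instead of a crash
        System.out.println(naiveAverage(Integer.MAX_VALUE, Integer.MAX_VALUE)); // -1, not 2147483647
    }
}
```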

11 Internet and World Wide Web

11.1 ARPANET (I)

In the late 1960s, ARPA—the Advanced Research Projects Agency of the United States Department of Defense—rolled out plans for networking the main computer systems of approximately a dozen ARPA-funded universities and research institutions.

The computers were to be connected with communications lines operating at speeds on the order of 50,000 bits per second, a stunning rate at a time when most people (of the few who even had networking access) were connecting over telephone lines to computers at a rate of 110 bits per second.

11.2 ARPANET (II)

Academic research was about to take a giant leap forward.

ARPA proceeded to implement what quickly became known as the ARPANET, the precursor to today’s Internet.

Things worked out differently from the original plan.

Although the ARPANET enabled researchers to network their computers, its main benefit proved to be the capability for quick and easy communication via what came to be known as electronic mail (e-mail).

11.3 ARPANET (III)

This is true even on today’s Internet, with e-mail, instant messaging, file transfer and social media such as Facebook and Twitter enabling billions of people worldwide to communicate quickly and easily.

The protocol (set of rules) for communicating over the ARPANET became known as the Transmission Control Protocol (TCP). TCP ensured that messages, consisting of sequentially numbered pieces called packets, were properly routed from sender to receiver, arrived intact and were assembled in the correct order.
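The reassembly idea can be illustrated with a toy model. This is not how a real TCP implementation works; the `Packet` class and `reassemble` method are hypothetical, and the sketch only shows how sequence numbers let a receiver restore the original order.

```java
import java.util.ArrayList;
import java.util.Arrays;
import java.util.Comparator;
import java.util.List;

class PacketReassemblySketch {

    // A toy packet: a sequence number plus a piece of the message.
    static class Packet {
        final int sequenceNumber;
        final String payload;

        Packet(int sequenceNumber, String payload) {
            this.sequenceNumber = sequenceNumber;
            this.payload = payload;
        }
    }

    // Sorts the received packets by sequence number and joins their payloads.
    static String reassemble(List<Packet> received) {
        List<Packet> ordered = new ArrayList<>(received);
        ordered.sort(Comparator.comparingInt((Packet p) -> p.sequenceNumber));
        StringBuilder message = new StringBuilder();
        for (Packet p : ordered) {
            message.append(p.payload);
        }
        return message.toString();
    }

    public static void main(String[] args) {
        // Packets may arrive out of order.
        List<Packet> received = Arrays.asList(
                new Packet(2, "lo, "), new Packet(1, "Hel"), new Packet(3, "world"));
        System.out.println(reassemble(received)); // Hello, world
    }
}
```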

11.4 Internet: A Network of Networks (I)

In parallel with the early evolution of the Internet, organizations worldwide were implementing their own networks for both intraorganization (that is, within an organization) and interorganization (that is, between organizations) communication.

A huge variety of networking hardware and software appeared. One challenge was to enable these different networks to communicate with each other.

11.5 Internet: A Network of Networks (II)

ARPA accomplished this by developing the Internet Protocol (IP), which created a true “network of networks”, the current architecture of the Internet.

The combined set of protocols is now called TCP/IP. Each Internet-connected device has an IP address—a unique numerical identifier used by devices communicating via TCP/IP to locate one another on the Internet.
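As a brief illustration of IP addresses as numerical identifiers, the sketch below parses two literal addresses with the standard `java.net.InetAddress` class. The class and method names are assumptions; the addresses come from ranges reserved for documentation, and passing a host name instead of a literal would trigger a DNS lookup.

```java
import java.net.InetAddress;
import java.net.UnknownHostException;

class IpAddressSketch {

    // Returns how many bytes the numeric form of a literal IP address occupies.
    static int addressLengthInBytes(String literal) {
        try {
            return InetAddress.getByName(literal).getAddress().length;
        } catch (UnknownHostException e) {
            throw new IllegalArgumentException("not a valid IP address: " + literal, e);
        }
    }

    public static void main(String[] args) {
        System.out.println(addressLengthInBytes("192.0.2.1"));   // 4 bytes (IPv4)
        System.out.println(addressLengthInBytes("2001:db8::1")); // 16 bytes (IPv6)
    }
}
```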

11.6 Internet: A Network of Networks (III)

Businesses rapidly realized that by using the Internet, they could improve their operations and offer new and better services to their clients.

Companies started spending large amounts of money to develop and enhance their Internet presence. This generated fierce competition among communications carriers and hardware and software suppliers to meet the increased infrastructure demand.

As a result, bandwidth—the information-carrying capacity of communications lines—on the Internet has increased tremendously, while hardware costs have plummeted.

11.7 World Wide Web: Making the Internet User-Friendly (I)

The World Wide Web (simply called “the web”) is a collection of hardware and software associated with the Internet that allows computer users to locate and view documents (with various combinations of text, graphics, animations, audios and videos) on almost any subject.

In 1989, Tim Berners-Lee of CERN (the European Organization for Nuclear Research) began developing HyperText Markup Language (HTML)—the technology for sharing information via “hyperlinked” text documents.

11.8 World Wide Web: Making the Internet User-Friendly (II)

Berners-Lee also wrote communication protocols such as HyperText Transfer Protocol (HTTP) to form the backbone of his new hypertext information system, which he referred to as the World Wide Web.

In 1994, he founded the World Wide Web Consortium (W3C), devoted to developing web technologies. One of the W3C’s primary goals is to make the web universally accessible to everyone regardless of disabilities, language or culture.

11.9 Web Services and Mashups (I)

The applications-development methodology of mashups enables you to rapidly develop powerful software applications by combining (often free) complementary web services and other forms of information feeds.

ProgrammableWeb provides a directory of over 16,500 APIs and 6,300 mashups. Their API University includes how-to guides and sample code for working with APIs and creating your own mashups. According to their website, some of the most widely used APIs are Facebook, Google Maps, Twitter and YouTube.

11.10 Web Services and Mashups (II)

| Web services source | How it’s used |
|---|---|
| Google Maps | Mapping services |
| Twitter | Microblogging |
| YouTube | Video search |
| Facebook | Social networking |
| | Photo sharing |
| | Social networking for business |
| PayPal | Payments |

11.11 Internet of Things

- The Internet is no longer just a network of computers—it’s an Internet of Things (IoT).

A thing is any object with an IP address and the ability to send data automatically over the Internet. Such things include:

- a car with a transponder for paying tolls,

- a heart monitor implanted in a human,

- smart thermostats that adjust room temperatures based on weather forecasts and activity in the home,

- and many more.

12 Software Technologies

12.1 Technologies (I)

Some buzzwords you’ll hear in the software development community:

- Agile software development is a set of methodologies that aim to get software implemented faster and with fewer resources (Agile Alliance, the Agile Manifesto).

- Refactoring involves reworking programs to make them clearer and easier to maintain while preserving their correctness and functionality. It’s widely employed with agile development methodologies. Many IDEs contain built-in refactoring tools to do major portions of the reworking automatically.
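A minimal sketch of refactoring, using an invented receipt example (all names and the discount rule are assumptions made for illustration): the "before" method mixes a pricing rule with formatting, while the "after" version extracts the calculation into its own method and names the magic numbers. Both versions return the same result, which is the point of refactoring.

```java
public class RefactoringDemo {

    // Before: one method mixes the discount rule with the formatting.
    static String receiptBefore(int priceInCents, int quantity) {
        int total = priceInCents * quantity;
        if (quantity >= 10) {
            total = total - total / 10;
        }
        return "Total (cents): " + total;
    }

    // After: the magic numbers become named constants...
    static final int BULK_QUANTITY = 10;
    static final int BULK_DISCOUNT_DIVISOR = 10; // total / 10 is a 10% discount

    // ...and the calculation is extracted into its own method.
    static int totalFor(int priceInCents, int quantity) {
        int total = priceInCents * quantity;
        if (quantity >= BULK_QUANTITY) {
            total = total - total / BULK_DISCOUNT_DIVISOR;
        }
        return total;
    }

    static String receiptAfter(int priceInCents, int quantity) {
        return "Total (cents): " + totalFor(priceInCents, quantity);
    }

    public static void main(String[] args) {
        // Both versions print the same line: behavior is preserved.
        System.out.println(receiptBefore(200, 10)); // prints "Total (cents): 1800"
        System.out.println(receiptAfter(200, 10));  // prints "Total (cents): 1800"
    }
}
```

Many IDEs can perform steps like "extract method" and "extract constant" automatically.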

12.2 Technologies (II)

- Design patterns are proven architectures for constructing flexible and maintainable object-oriented software. The field of design patterns tries to enumerate those recurring patterns, encouraging software designers to reuse them to develop better-quality software using less time, money and effort.

- LAMP is an acronym for the open-source technologies that many developers use to build web applications. It stands for Linux, Apache, MySQL (or MariaDB) and PHP (or Perl or Python, two other popular scripting languages). WAMP is the equivalent stack on Windows.
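To make the idea of a design pattern concrete, here is a small sketch of one well-known pattern, Strategy, in which an algorithm is placed behind an interface so it can be swapped without changing the code that uses it. The shop-checkout domain and every name in it (`DiscountStrategy`, `Checkout`) are hypothetical and chosen only for illustration.

```java
import java.util.Arrays;
import java.util.List;

public class StrategyDemo {

    // The strategy: an interchangeable pricing rule.
    interface DiscountStrategy {
        int apply(int priceInCents);
    }

    // The context delegates to whichever strategy it was given.
    static final class Checkout {
        private final DiscountStrategy strategy;

        Checkout(DiscountStrategy strategy) {
            this.strategy = strategy;
        }

        int total(List<Integer> pricesInCents) {
            int sum = 0;
            for (int price : pricesInCents) {
                sum += strategy.apply(price);
            }
            return sum;
        }
    }

    public static void main(String[] args) {
        List<Integer> cart = Arrays.asList(1000, 500);
        // Strategies are supplied as lambdas; Checkout itself never changes.
        Checkout regular = new Checkout(price -> price);
        Checkout halfPriceSale = new Checkout(price -> price / 2);
        System.out.println(regular.total(cart));       // prints 1500
        System.out.println(halfPriceSale.total(cart)); // prints 750
    }
}
```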

12.3 Technologies (III)

- Software has generally been viewed as a product. With Software as a Service (SaaS), the software runs on servers elsewhere on the Internet. When that server is updated, all clients worldwide see the new capabilities (no local installation is needed). You access the service through a browser.

- Platform as a Service (PaaS) provides a computing platform for developing and running applications as a service over the web, rather than installing the tools on your computer. Some PaaS providers are Google App Engine, Amazon EC2 and Microsoft Azure (formerly Windows Azure).

12.4 Technologies (IV)

- SaaS and PaaS are examples of cloud computing. Keeping software and data in the “cloud” lets you increase or decrease computing resources as demand changes, which is more cost-effective than purchasing hardware to provide enough storage and processing power for occasional peak demands. Cloud computing also saves money by shifting the burden of managing these apps (installing and upgrading the software, security, backups and disaster recovery) to the service provider.

- Software Development Kits (SDKs) include tools and documentation developers use to program applications.

12.5 Software product-release terminology (I)

Software is complex. Large, real-world software applications can take many months or even years to design and implement.

When large software products are under development, they typically are made available to the user communities as a series of releases, each more complete and polished than the last.

12.6 Software product-release terminology (II)

- Alpha software is the earliest release of a software product that’s still under active development. Alpha versions are often buggy, incomplete and unstable, and are released to a relatively small number of developers for testing new features, getting early feedback, etc. Alpha software is also called early access software.

- Beta versions are released to a larger number of developers later in the development process after most major bugs have been fixed and new features are nearly complete. Beta software is more stable, but still subject to change.

12.7 Software product-release terminology (III)

- Release candidates are generally feature complete, (mostly) bug free and ready for use by the community, which provides a diverse testing environment.

- Final Release: any bugs that appear in the release candidate are corrected, and eventually the final product is released to the general public. Software companies often distribute incremental updates over the Internet.

- Continuous Beta: software that’s developed using this approach (e.g., Google Search or Gmail) generally does not have version numbers. It’s hosted in the cloud and is constantly evolving (users have the latest version).

13 Getting Your Questions Answered

13.1 Online forums

There are many online forums in which you can get your Java questions answered and interact with other Java programmers. Some popular Java and general programming forums include: